Background

About Kellogg

Kellogg Company is the world’s leading cereal company, the second-largest producer of cookies, crackers, and savory snacks, and a leading North American frozen foods company. With 2015 sales of \$13.5 billion, Kellogg produces more than 1,600 foods across 21 countries and markets its many brands in 180 countries.

Driving Revenue with Customer Logistics Data

Kellogg relies on customer logistics data to make informed decisions and improve efficiencies around shopping experiences. The accuracy and speed of that data are directly tied to the profitability of Kellogg’s business.

Leveraging In-Memory for Faster Access to Data

Making data readily available to business users is top-of-mind for Kellogg, which is why the company sought an in-memory solution to reduce data latency and improve concurrency.

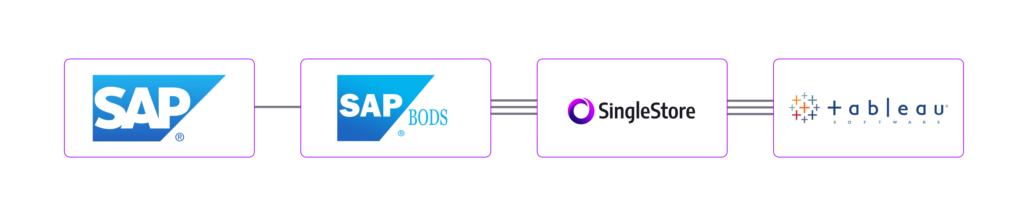

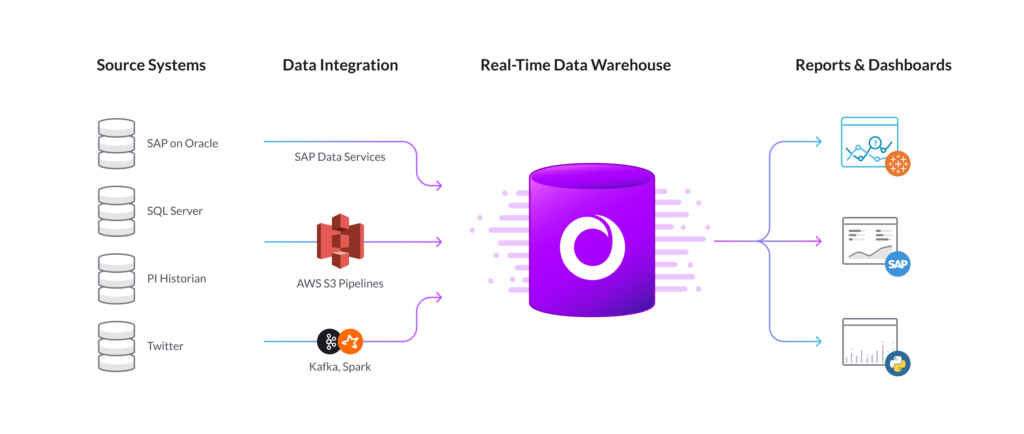

Starting with an initiative to speed access to customer logistics data, Kellogg turned to SingleStore to make its 24-hour ETL process faster. In an interview at Strata+Hadoop World, JR Cahill, Principal Architect for Global Analytics at Kellogg, said:

“We wanted to see how we could transform processes to make ourselves more efficient and start looking at things more intraday rather than weekly to make faster decisions.”

Results

Reducing Latency from 24 Hours to Minutes

JR and his team scaled up their SingleStore instance in AWS and within two weeks reduced the ETL process from 24 hours to an average of 43 minutes. On top of that, the team added three years of archived data to SingleStore, a feat not possible with their previous system, while holding the average ETL time at 43 minutes.

Making Use of External Data

Kellogg expanded its use of AWS and SingleStore to include third-party data, such as Twitter analysis, along with additional enterprise data subject areas. The ease of adding EC2 nodes to the SingleStore cluster supported the scale Kellogg required for new user concurrency and data volumes. SingleStore also gave Kellogg the ability to use AWS S3 and Kafka through SingleStore data ingestion pipelines. Kellogg can now continuously ingest data from AWS S3 buckets and Apache Kafka for up-to-date analysis, with exactly-once semantics for more accurate reporting.
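To make "exactly-once semantics" concrete, here is a minimal Python sketch of one common way to get that effect: write each batch and the source position it came from in the same database transaction, so a redelivered batch is detected and skipped. This is an illustration only, not SingleStore's pipeline implementation or API. It uses the standard-library `sqlite3` module as a stand-in database, and the table names, the `open_store` and `ingest_batch` helpers, and the `"logistics-feed"` source name are all hypothetical.

```python
import sqlite3


def open_store():
    """Create an in-memory stand-in database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE shipments (source TEXT, pos INTEGER, payload TEXT)")
    conn.execute("CREATE TABLE offsets (source TEXT PRIMARY KEY, next_pos INTEGER)")
    return conn


def ingest_batch(conn, source, start_pos, records):
    """Apply a batch and advance the stored position in one transaction.

    Returns the number of records actually written. Records at positions
    that were already committed are skipped, so redelivery is harmless.
    """
    with conn:  # commits on success, rolls back if an exception is raised
        row = conn.execute(
            "SELECT next_pos FROM offsets WHERE source = ?", (source,)
        ).fetchone()
        committed = row[0] if row else 0
        if start_pos > committed:
            raise ValueError("gap in input; refusing to skip records")
        # Drop the prefix of the batch that has already been applied.
        fresh = records[committed - start_pos:]
        if not fresh:
            return 0
        conn.executemany(
            "INSERT INTO shipments (source, pos, payload) VALUES (?, ?, ?)",
            [(source, committed + i, rec) for i, rec in enumerate(fresh)],
        )
        conn.execute(
            "INSERT OR REPLACE INTO offsets (source, next_pos) VALUES (?, ?)",
            (source, committed + len(fresh)),
        )
        return len(fresh)


if __name__ == "__main__":
    conn = open_store()
    print(ingest_batch(conn, "logistics-feed", 0, ["a", "b", "c"]))  # first delivery
    print(ingest_batch(conn, "logistics-feed", 0, ["a", "b", "c"]))  # redelivery
    print(ingest_batch(conn, "logistics-feed", 2, ["c", "d"]))       # overlapping batch
```

Because the data rows and the position update commit together, a crash between the two cannot leave the table ahead of or behind the recorded position, which is what keeps downstream reports free of duplicates or gaps.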

Making Data More Accessible in Tableau

Kellogg uses Tableau as a business intelligence and data visualization tool throughout its entire organization. By integrating Tableau with SingleStore, Kellogg was able to run visualizations directly on top of SingleStore rather than running an extraction. By doing so, Kellogg realized a 20x improvement in analytics performance. This allows hundreds of business users to concurrently access up-to-date data and increases the profitability of customer logistics data.

JR summarizes the benefit, saying:

“We have to be able to provide business value on each and every project. Because every business user is requesting speed and the ability to move at an incredibly iterative pace, we need to be able to provide that, and in-memory allows us to do so.”
