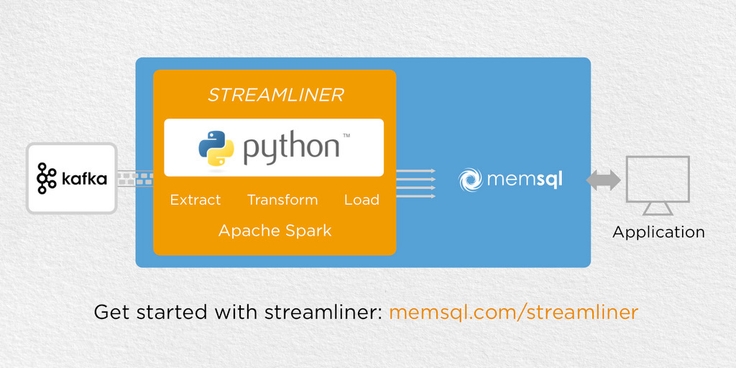

This month’s SingleStore Ops release includes performance features for Streamliner, our integrated Apache Spark solution that simplifies creation of real-time data pipelines. Specific features in this release include the ability to run Spark SQL inside of the SingleStore database, in-browser Python programming, and NUMA-aware deployments for SingleStore.

We sat down with Carl Sverre, SingleStore architect and technical lead for Ops development, to talk about the latest release.

Q: What’s the coolest thing about this release for users?

I think the coolest thing for users is that we now support Python as a programming language for building real-time data pipelines with Streamliner. Previously, users needed to code in Scala – Scala is less popular, more constrained, and harder to use. In contrast, Python syntax is widely in use by developers, and has a broad set of programming libraries providing extensibility beyond Spark. Users can import Python libraries like Numpy, Scipy, and Pandas, which are easy to use and feature-rich compared to corresponding Java / Scala libraries. Python also enables users to prototype a data pipeline much faster than with Scala. To allow users to code in Python, we built SingleStore infrastructure on top of PySpark and also implemented a ‘pip’ command that installs any Python package across machines in a SingleStore cluster.

Q: Why focus on Python, the “language of data science”?

We believe that Python is the way most generalist developers know how to write code. In fact, here at SingleStore, much of the software we write outside of the core database is written in Python – this includes Ops, our interactive management tool; Psyduck, the code testing tool; and much more. We know that developers experimenting with their own data pipelines (many using Streamliner) want to get up and running quickly. Naturally, they seek the most intuitive programming language to do so: Python!

Q: Can you dig into SQL pushdown on a technical level?

Spark SQL is the Apache Spark module for working with structured data. As an example, with Spark SQL you can do a command like:

customersDataFrame.groupBy("zip_code").count().show()

It counts customers in a Spark data table (dataframe) grouped by zip code. The same query can also be run through Spark SQL, for example:

sqlContext.sql(“select count(*) from customers group by zip-code”)

Now, we can boost Spark SQL performance by pushing the majority of computation into SingleStore. How do we do it? First, we process the Spark SQL operator tree and turn it into SQL syntax that SingleStore understands, then execute that query directly against the SingleStore database, where performance on structured data is strictly faster than Spark. By leveraging the optimized SingleStore engine, we are able to process individual Spark SQL queries much faster than Spark can.

Q: What are you most proud of in this release?

I am most proud of the performance boost from running Spark SQL in SingleStore. It is awesome that we can leverage the SingleStore in-memory, distributed database to boost Spark performance without users having to change any of their application code. With SingleStore, Spark SQL is just faster.

Get The SingleStore Spark Connector Guide

The 79 page guide covers how to design, build, and deploy Spark applications using the SingleStore Spark Connector. Inside, you will find code samples to help you get started and performance recommendations for your production-ready Apache Spark and SingleStore implementations.

Download Here

.png?auto=webp&width=320&disable=upscale)