Predictive analytics, machine learning, and AI are being used to power interactive queries and outstanding customer experiences in real time, changing how companies do business. SingleStore is widely used to help power these advanced applications, which require fast access to recent data, fast processing to combine new and existing information, and fast query response. In this webinar, Eric Hanson, Principal Product Manager at SingleStore, shows how SingleStore customers are using this fast, scalable SQL database in cutting-edge applications. You can read this summary – then, to get the whole story, read the transcript, view the webinar, and access the slides.

Mapping SingleStore to Development and Deployment

Eric describes how SingleStore helps at each step as you build and run machine learning models:

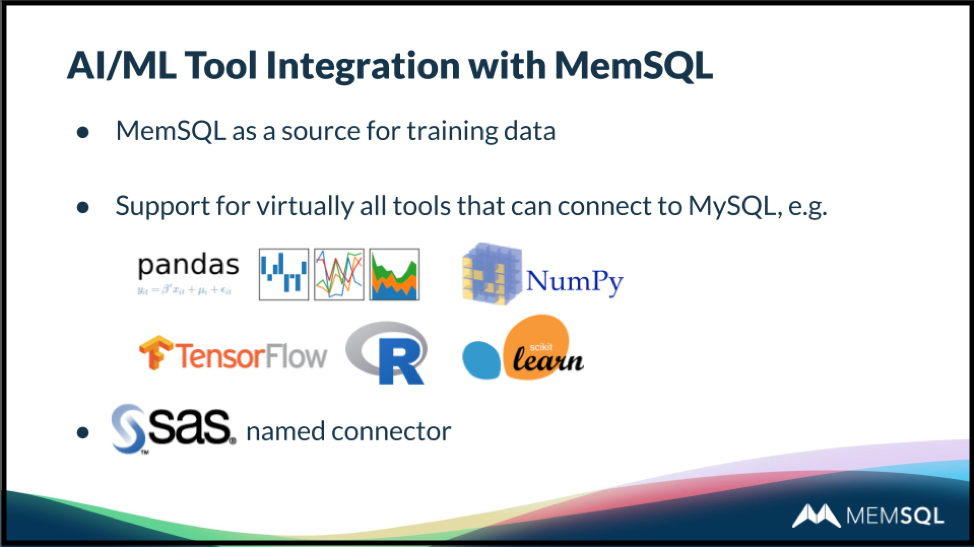

- Training models. You can use SingleStore as a fast, scalable source for training data, accessing the data via standard SQL. With SingleStore, you can complete many more training runs in less time.

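- Integration with machine learning and AI tools. SingleStore supports the MySQL wire protocol directly, so any tool that can connect to a MySQL-compatible database, usually through a standard MySQL client driver, can connect to SingleStore as well. This opens SingleStore to much of the machine learning and AI ecosystem. The Python data analysis library pandas can query it through a MySQL driver and hand the results to the scikit-learn Python library, NumPy, or the TensorFlow machine learning platform. The R language and SAS analytics can connect through their own MySQL-compatible connectors.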

- Fast ingest. SingleStore accepts both streaming data, for real-time ingest, and bulk loads from a very wide range of sources.

- Scoring on load. As you load data through a SingleStore Pipeline, you can transform it with a Python script or any other executable that you create. This lets you, for example, compute a “score” column from existing input columns during the load process, very quickly.
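To make scoring on load more concrete, here is a minimal sketch of what a pipeline transform script could look like. It assumes the pipeline hands the script newline-delimited CSV records on stdin and reads transformed CSV back from stdout; the exact framing depends on the pipeline's source type, so check it for your setup. The three input columns, the `WEIGHTS` and `BIAS` values, and the logistic scoring function are all hypothetical stand-ins for a real trained model.

```python
#!/usr/bin/env python3
"""Hypothetical pipeline transform: append a "score" column to each CSV record.

Assumption: newline-delimited CSV with three numeric input columns arrives on
stdin, and the pipeline reads the transformed CSV back from stdout.
"""
import csv
import io
import math
import sys
from typing import Iterable, List

# Hypothetical model parameters; a real deployment would load trained values.
WEIGHTS = [0.8, -1.2, 0.05]
BIAS = -0.5


def score_row(features: List[float]) -> float:
    """Logistic score in (0, 1) from a linear combination of the features."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    # Numerically stable sigmoid: avoids overflow in exp() for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def transform(lines: Iterable[str]) -> str:
    """Return CSV text with the original columns plus a computed score column."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        features = [float(v) for v in row[: len(WEIGHTS)]]
        writer.writerow(row + [f"{score_row(features):.6f}"])
    return out.getvalue()


if __name__ == "__main__":
    sys.stdout.write(transform(sys.stdin))
```

In a real deployment, a script like this would be attached to a pipeline through its `WITH TRANSFORM` clause, so every batch is scored as it lands in the table and no separate scoring job is needed.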

Using these features together supports high productivity during development and very fast execution in production. Developers use their accustomed, purpose-built machine learning and AI tools, and are able to build and test models separately from the production pipeline.

In production, SingleStore’s scalability, very fast ingest, and fast execution of pre-written, pre-tested Python scripts and pre-compiled executable code support very high performance at scale. Systems with feedback loops benefit most, because the gains from fast ingestion and fast execution compound over time: each faster cycle gives the next one fresher data to work from.

Mapping SingleStore Features to Machine Learning and AI

While the overall philosophy of SingleStore is to integrate well with existing tools, a few SingleStore capabilities give machine learning and AI use cases an additional boost. Eric describes them:

- Scalability. Operationalizing machine learning and AI programs is difficult enough, given that these fields still focus more on research and development than on implementation. It’s even more challenging when your shiny new ML pipeline can’t scale to match demand. Because SingleStore is fully distributed – for ingest, transactions, and analytics – it can address a wide range of scalability problems, including this one.

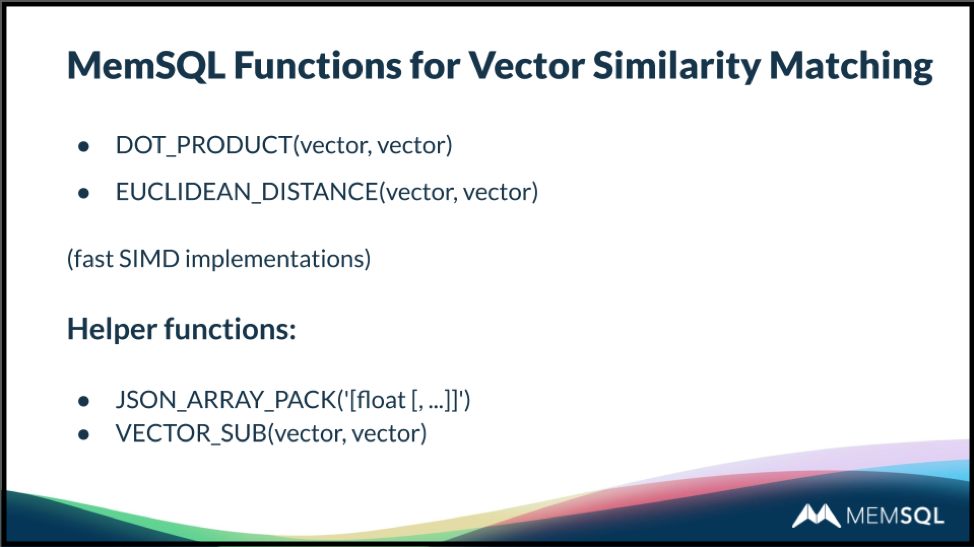

- Vector functions. SingleStore has a few functions that are especially useful for vector similarity matching, allowing complex operations to run very fast at scale. DOT_PRODUCT and EUCLIDEAN_DISTANCE, which each take two vectors, do the core similarity work. JSON_ARRAY_PACK, which converts a JSON array of floating-point numbers into the packed binary vector format these functions operate on, and VECTOR_SUB, which subtracts one vector from another, are helpful supporting functions.

- ANSI SQL support. AI is often seen as a scientific function, isolated from business concerns. The full ANSI SQL support in SingleStore is highly useful operationally and can also serve as a bridge between the AI group and the business side.
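To illustrate what the vector functions described above compute, here is a plain-Python sketch of their semantics. It is not SingleStore's implementation. It assumes that a packed vector is a sequence of consecutive little-endian 32-bit floats, and the helper names (`pack_vector`, `top_k_by_dot_product`, and so on) are hypothetical.

```python
import math
import struct
from typing import Dict, List, Sequence, Tuple


def pack_vector(values: Sequence[float]) -> bytes:
    """Pack floats as little-endian 32-bit IEEE 754 values (assumed to mirror JSON_ARRAY_PACK)."""
    return struct.pack(f"<{len(values)}f", *values)


def unpack_vector(blob: bytes) -> List[float]:
    """Inverse of pack_vector: 4 bytes per element."""
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))


def _pair(a: bytes, b: bytes) -> Tuple[List[float], List[float]]:
    va, vb = unpack_vector(a), unpack_vector(b)
    if len(va) != len(vb):
        raise ValueError("vectors must have the same length")
    return va, vb


def dot_product(a: bytes, b: bytes) -> float:
    """Sum of element-wise products, as DOT_PRODUCT computes."""
    va, vb = _pair(a, b)
    return sum(x * y for x, y in zip(va, vb))


def euclidean_distance(a: bytes, b: bytes) -> float:
    """Square root of the summed squared differences, as EUCLIDEAN_DISTANCE computes."""
    va, vb = _pair(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(va, vb)))


def vector_sub(a: bytes, b: bytes) -> bytes:
    """Element-wise a - b, returned in packed form, as VECTOR_SUB does."""
    va, vb = _pair(a, b)
    return pack_vector([x - y for x, y in zip(va, vb)])


def top_k_by_dot_product(
    query: bytes, rows: Dict[str, bytes], k: int
) -> List[Tuple[str, float]]:
    """Rank stored vectors by similarity to the query, highest dot product first."""
    scored = [(key, dot_product(query, vec)) for key, vec in rows.items()]
    scored.sort(key=lambda kv: kv[1], reverse=True)
    return scored[:k]
```

In SingleStore itself, the ranking step would typically be a single query along the lines of `SELECT id, DOT_PRODUCT(embedding, JSON_ARRAY_PACK('[0.1, 0.2, 0.3]')) AS score FROM items ORDER BY score DESC LIMIT 5`, where the table and column names are hypothetical. The database does the per-row arithmetic next to the data, so the vectors never have to be shipped to the client.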

Approving Credit Card Swipes in 50ms of Processing Time

Despite years of hype, it’s still early days for real-world implementations of machine learning models and AI programs in production applications. As deployments increase, SingleStore is being used for an ever-widening range of applications. (Streaming technologies such as Kafka and Spark are often deployed for the same purpose, despite the degree of change to data flows, skills, and vendor relationships required to take advantage of them.)

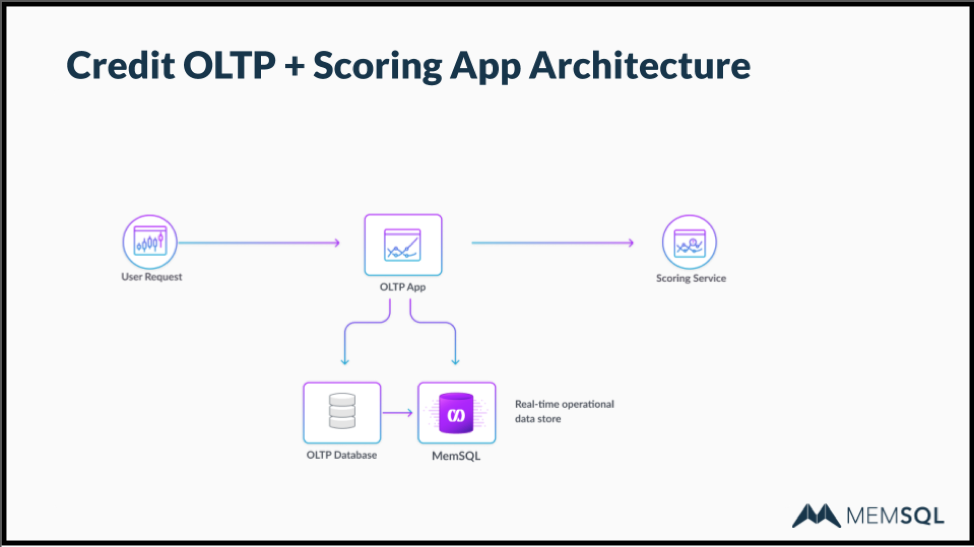

One example is a credit card fraud detection application developed by a major US bank. Fraud detection used to be a batch process that ran at night, allowing many illicit purchases to be made on a stolen card or with stolen card data. This bank is instead implementing fraud detection “on the swipe,” with approval decided within one second of the swipe – most of which is taken up by data transmission time. Only with SingleStore have they been able to assemble the data, process it, and make a decision with their 70-feature model in real time.

Conclusion

For research and development, SingleStore is free to use for workloads up to 4 nodes (typically, 128GB of RAM), with much larger on-disk data sizes possible. With the free version, you get community support through the SingleStore Forums. To run larger workloads, and to receive dedicated support (as many organizations require for production), contact SingleStore for an enterprise license.

SingleStore’s connectivity, capabilities, and speed make it a solid choice for machine learning and AI development and deployment. For more information you can read the transcript, view the webinar, and access the slides. Or, download and run SingleStore for free today!