With the launch of SingleStoreDB Self-Managed 6.5, we’ve added several new features to advance performance, accelerate time-to-insight, and simplify operations. With the goal of making SingleStore not only the world’s best database, but truly the easiest database to use.

Many customers choose SingleStore for its potential to scale, but oftentimes a workload can scale in unanticipated ways at unexpected times. Problems can manifest as temporary spikes in load, skew, or concurrency.

In SingleStoreDB Self-Managed 6.5, we added workload management to more gracefully handle the unpredictable spikes in queries, while still maintaining high performance during normal load.

In this blog post, I will walk through a few examples that workload management will improve. Then I’ll explain the system design that allows us to handle those cases. And finally, I’ll share some benchmarks showing the effect workload management can have.

Maybe This Sounds Familiar

Here is an example scenario where a customer might experience unanticipated load. Suppose we have a columnstore purchases table sharded on item_unique_id. During a one hour flash sale, a retailer sells an unexpected number of one specific item, generating an overwhelming amount of logging data for the item and creating temporary ingest skew on the associated node. Though the other nodes are running fine, queries on the skewed node get further and further behind, and eventually result in downtime of their website, losing them sales.

Workload management is able to solve the problem by limiting key resource-intensive queries until the node has a chance to get back to a normal load.

How Workload Management Works

Workload management can be broken into three components that work together to address cases of heavy load: Detection, Prediction, and Management.

Detection refers to identifying when any node is struggling. SingleStore differentiates between memory used for table data and memory used temporarily in queries to determine if it is safe to continue forwarding more queries to the target nodes.

Prediction refers to estimating the resource usage of queries and classifying them into groups based on memory usage and expected runtime. In SingleStoreDB Self-Managed 5.8, we introduced management views to allow users to see resource usage statistics of previously-run queries. Workload management uses these same statistics to determine how resource intensive each query is from a memory consumption perspective.

The last component is Management, which admits queries in three tiers. The cheapest queries such as single-partition selects, inserts, or updates avoid being queued entirely. Queries that use moderate resource amounts are queued on a FIFO (first-in first-out) basis at a rate dependent on the highest load among leaves.

Lastly, the most expensive queries are queued with a maximum concurrency limit and the Master Aggregator decides when each will run. Since there is a latency cost to the coordination, only the most resource intensive queries fall into this category.

Putting it into our retailer example from before, SingleStore would first detect the struggling node. Queries that could be predicted to be fast and cheap would still complete with no regression in latency. The rate of expensive queries would be scaled down to compensate and allow the leaf to keep up.

Once the leaf was able to free up more memory, the expensive queries could resume their normal rate of admission and run freely. In the context of our example online retailer, once the load spike finished, their memory-intensive analytical queries would return to their previous level of performance.

Workload Management Benchmark

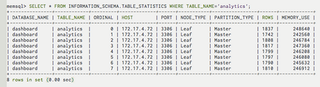

We can compare individual query performance between SingleStore with the workload management feature turned off versus on when spiking to 4x normal concurrency.

In our benchmark we tested 8 concurrent users (normal concurrency) and 32 concurrent users (spike concurrency). We used a SingleStore configuration with 1 core per partition to ensure that even the normal concurrency mode would be bottlenecked on CPU. Each user would send queries to SingleStore in a random order with 1 in 25 of the queries being expensive (high cardinality distributed group-by) and the rest cheap (various low cardinality group-by and distributed joins).

Query latency with concurrency spike:

| Workload Management Off | Workload Management On | |||

|---|---|---|---|---|

| Cheap Query Average Latency | Expensive Query Latency | Cheap Query Average Latency | Expensive Query Latency | |

| Normal Concurrency | 2.7 sec | 37 sec | 2.5 sec | 20 sec |

| 4x spike concurrency | 7.5 sec | Queries Fail due to running out of Memory | 6.4 sec | 115 sec |

Under normal concurrency, workload management schedules the expensive queries more efficiently, providing a slight improvement to latency overall. Another side effect of enabling workload management is that SingleStore actually scales better than the factor by which we scaled concurrency on the more latency-sensitive cheap queries (56% better) with a tradeoff with the expensive query (44% worse). In contrast, without workload management, the concurrency spike causes expensive queries to fail while also slowing down cheaper queries.

We’re excited about this new feature, and think it will help you manage your workloads more effortlessly than before. Go ahead and try SingleStoreDB Self-Managed 6.5, or read more about what’s new in our blog.